Everyone — and their cousin’s ex’s part-time barista’s childhood neighbor’s invisible friend — is talking about AI right now. So… I figured I’d jump in, too.

As a developer and an author, I’ve had some solid wins with AI tools like GitHub Copilot and ChatGPT. They’ve helped me get unstuck and write boilerplate code faster, and they’ve even explained some arcane APIs in ways that actually made sense.

But I’ve also had some massive, frustrating failures — we’re talking 40 to 80 hours of work completely wasted — chasing solutions that were confidently wrong.

In one particularly painful case, I was a little fuzzy on the problem I was trying to solve. I turned to AI for help, and it confidently led me in the exact wrong direction. For days. I didn’t realize it until I had already built a house of cards around a flawed idea.

That experience made me stop and ask:

When is AI actually helpful?

And when is it risky enough that I should just step away from the chatbot?

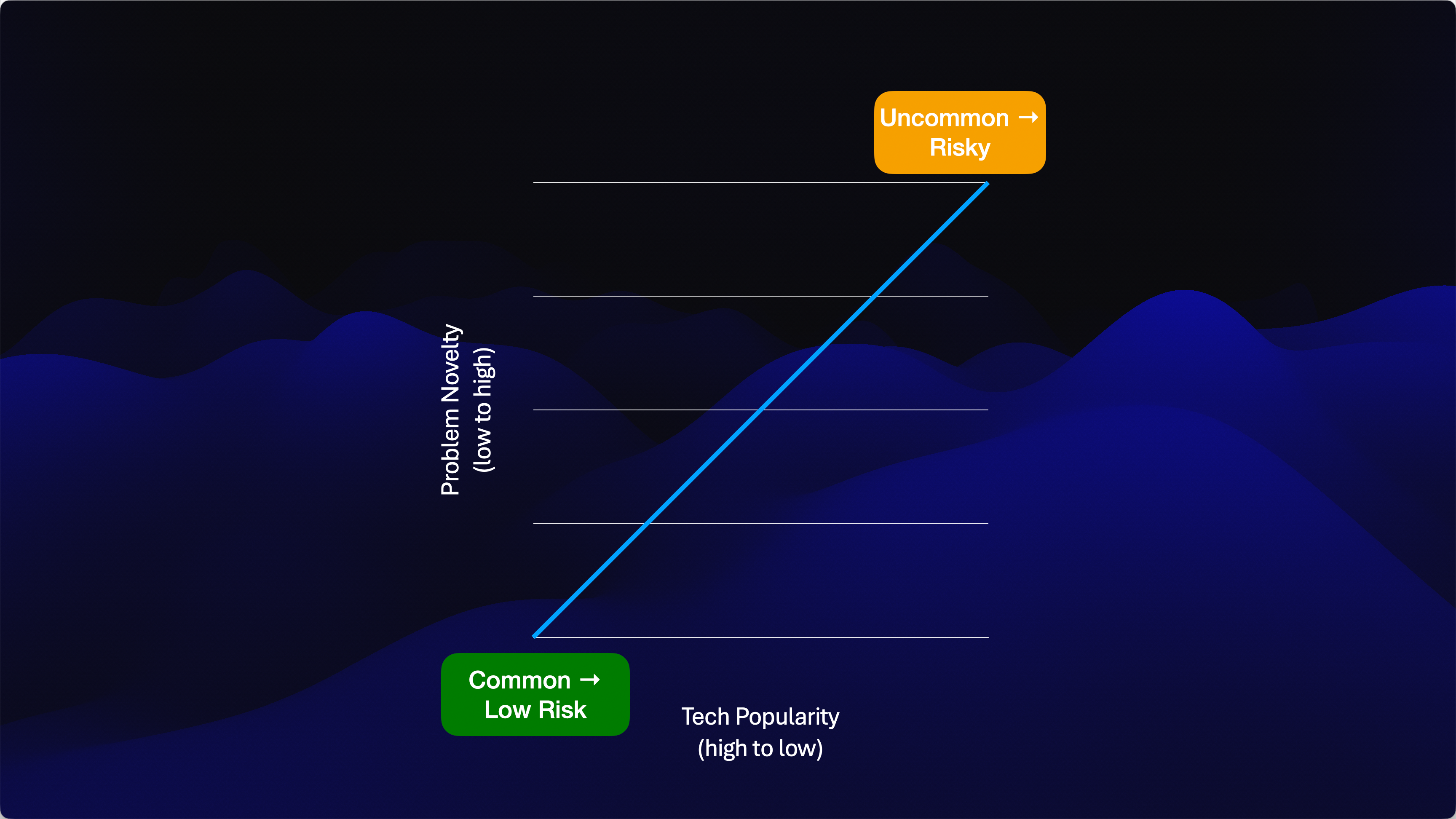

The “AI Applicability Matrix”

To help make sense of this, I started sketching what I’m calling the AI Applicability Matrix. Think of it as a cousin to the Stacey Complexity Matrix — except instead of guiding project delivery strategies, it helps you decide when you can safely trust AI.

Or maybe it should just be called the “Should I Trust AI?” Grid.

Either way, this framework looks at two axes:

- Problem Novelty – How unique or specific is your problem?

- Tech Popularity – How common or well-documented is the technology you’re working with?

Tech Popularity

Start by asking:

Is the technology you’re using mainstream?

Are there lots of blog posts, Stack Overflow answers, GitHub examples, and good documentation?

If yes, AI likely has a solid grasp of it. The models behind Copilot and ChatGPT were trained on huge amounts of public code and text, so they’re more likely to give you accurate, helpful answers.

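But if you’re working with something niche or new, like a bleeding-edge library or a poorly documented API, you’re in riskier territory. There are fewer examples for the model to learn from, and a brand-new release may postdate its training data entirely.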

Problem Novelty

Next, consider the problem you’re solving.

Is it a common use case that lots of developers encounter?

Or is it something specific to your business domain or team?

AI tends to do well when it can recognize patterns and recall examples it has seen before. So if your problem is generic, say building a calculator or setting up a basic CRUD API, you’re golden. But if your challenge is specific to your architecture or domain logic, the model has likely never seen anything quite like it, so it tends to fill the gaps with plausible-sounding guesses.

Putting It Together

Here’s how it breaks down:

- Low Novelty + High Popularity: You’re in the AI sweet spot. Go ahead — lean on ChatGPT or Copilot. It’ll probably save you a bunch of time.

- High Novelty + Low Popularity: Danger zone. AI might sound confident, but its advice could be totally off-base. Use it sparingly, and validate everything.

- Middle ground (everything else, such as a novel problem on a popular stack, or a common problem on a niche one): Use your judgment. If AI’s output starts to smell off, trust your gut and dig deeper.
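If it helps to see the grid as a decision rule, here’s a minimal Python sketch. The 0-to-1 scores, the 0.5 cutoff, and the names `Zone` and `classify` are all hypothetical; the matrix is meant as a gut-check, not a formula, so treat the numbers as a stand-in for your own judgment.

```python
from enum import Enum


class Zone(Enum):
    """The three regions of the AI Applicability Matrix."""
    SWEET_SPOT = "Lean on AI; it will probably save you time."
    DANGER_ZONE = "Use AI sparingly and validate everything."
    MIDDLE_GROUND = "Use your judgment; dig deeper if the output smells off."


def classify(novelty: float, popularity: float, cutoff: float = 0.5) -> Zone:
    """Place a task on the matrix.

    novelty:    how unique or specific the problem is (0 = generic, 1 = one-of-a-kind)
    popularity: how common and well documented the tech is (0 = obscure, 1 = mainstream)
    cutoff:     hypothetical threshold separating "low" from "high" on both axes
    """
    # Reject scores outside the assumed 0-to-1 scale.
    for name, value in (("novelty", novelty), ("popularity", popularity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")

    high_novelty = novelty >= cutoff
    high_popularity = popularity >= cutoff

    if not high_novelty and high_popularity:
        return Zone.SWEET_SPOT      # common problem, mainstream tech
    if high_novelty and not high_popularity:
        return Zone.DANGER_ZONE     # unusual problem, niche tech
    return Zone.MIDDLE_GROUND       # the two mixed quadrants


if __name__ == "__main__":
    # Illustrative scores only: an Angular calculator vs. a Bicep + VM Scale Sets rollout.
    print(classify(novelty=0.1, popularity=0.9).name)  # SWEET_SPOT
    print(classify(novelty=0.8, popularity=0.2).name)  # DANGER_ZONE
```

The single shared cutoff keeps the sketch as simple as the 2×2 grid it mirrors; in practice you might want a wider "middle" band where neither score is clearly high or low.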

A Real Example

Let’s say you’re building a simple calculator app in Angular. That’s a well-understood tech stack solving a common problem. AI’s going to crush that.

But what if you’re using Bicep templates to deploy and configure a custom VM image in Azure and manage it with Virtual Machine Scale Sets? That’s a pretty niche combo, well into the danger zone. AI might hallucinate, or worse, lead you confidently down the wrong path.

Summary

AI isn’t magic. It’s a powerful assistant, but only when it has seen enough similar code and problems to be accurate. The trick is knowing when you’re in “safe territory” and when you’re likely to get burned.

That’s what the AI Applicability Matrix is for: a quick gut-check to decide how much trust to place in your AI copilot.

Curious what you think.

Have you been led astray by AI? Or had a win that saved your bacon?

Let me know.